RusVectōrēs: word embeddings for Russian online

A tool to explore semantic relations between words in distributional models.

We are against the war started by Russia; this is why the service uses the model trained on Ukrainian Wikipedia and CommonCrawl by default. To choose other models (including Russian ones), visit the Similar Words tab or other tabs.

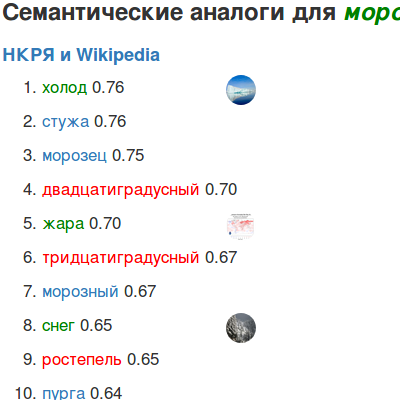

Enter a word to produce a list of its 10 nearest semantic associates:

Project news

- 31/03/2025 — In April 2025, the RusVectōrēs project turns 10! Read our memories and thoughts about this anniversary (in Russian).

- 29/07/2022 — We are against the war and we stand with Ukraine. This is why RusVectōrēs now uses the model trained on the Ukrainian Wikipedia and CommonCrawl by default. You can, of course, still choose other models in specific tabs or via the API.

- 10/12/2021 — A new static model, ruwikiruscorpora_upos_cbow_300_10_2021, trained on the Russian National Corpus and a Russian Wikipedia dump from November 2021. All your favorite COVID-19-related neologisms are there!

- 26/08/2021 — The visualisation tab now features PCA plots (in addition to t-SNE). Their advantage is that they are deterministic: the PCA projection for a given list of words and a given model will always be the same. Also, a number of minor bugs have been fixed.

- 18/01/2021 — We present a new service that generates context-dependent lexical substitutes from pre-trained ELMo models in real time. You enter a sentence and receive a list of the nearest semantic associates for each word token. Importantly, the associates are context-dependent: the same word gets different associates in different sentences. This makes it possible to study and demonstrate lexical ambiguity.

- 22/10/2020 — New kids in our model repository! First, we publish two fastText models trained on GeoWAC, a large, geographically balanced modern web corpus of Russian. The first model (geowac_lemmas_none_fasttextskipgram_300_5_2020) is trained on lemmas; the second one (geowac_tokens_none_fasttextskipgram_300_5_2020) is trained on raw tokens, and we have never had a static model like this on RusVectōrēs before. The lemmatized model is also available for queries via our web interface. Second, we have released an ELMo model trained on the large Araneum Russicum Maximum corpus (araneum_lemmas_elmo_2048_2020). Finally, to work with pre-trained ELMo models, you can now use our fully packaged Python library simple_elmo.

- 01/09/2020 — On the first day of autumn, we invite you to check out our side projects. First, RusNLP is a search engine for papers presented at Russian NLP conferences. Second, ShiftRy is a web service for analyzing diachronic changes in the usage of words in news texts from Russian mass media.

- 31/01/2020 — A large ELMo model trained on the Taiga corpus has been published, along with the code to work with such models.

- 22/11/2019 — Along with lists of a word's nearest neighbors, we now feature dynamic interactive graphs showing the relations between these neighbors (built with the vec2graph library).

- 26/08/2019 — New contextualized ELMo models have been published: you can choose between models trained on tokens and models trained on lemmas.

- 22/04/2019 — Lists of nearest associates in all tabs now show only high-frequency and mid-frequency associates by default. Frequency checkboxes let you change this behaviour: for example, you can turn on low-frequency words. This makes it easy to fine-tune the balance between coverage and quality.

- 28/01/2019 — The RusVectōrēs tutorial notebook has been updated. It shows how to preprocess Russian words in order to look them up in our models, and how to work with the models themselves. It takes into account the changes introduced in 2019.
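To look a word up, it has to match the model's vocabulary entries. The sketch below assumes those entries are lowercased lemmas with a Universal PoS tag appended after an underscore (for example `удар_NOUN`), which is consistent with the `upos` in model names such as ruwikiruscorpora_upos_cbow_300_10_2021; check the tutorial notebook for the exact preprocessing. The helper `make_query_key` is hypothetical, and lemmatization and tagging are assumed to have happened already.

```python
# Core Universal PoS tag set (Universal Dependencies v2).
UPOS_TAGS = frozenset({
    "ADJ", "ADP", "ADV", "AUX", "CCONJ", "DET", "INTJ", "NOUN", "NUM",
    "PART", "PRON", "PROPN", "PUNCT", "SCONJ", "SYM", "VERB", "X",
})


def make_query_key(lemma: str, upos: str) -> str:
    """Build a 'lemma_UPOS' lookup key (hypothetical helper, assumed format)."""
    lemma = lemma.strip().lower()  # assumption: model vocabularies are lowercased
    upos = upos.strip().upper()
    if not lemma:
        raise ValueError("empty lemma")
    if upos not in UPOS_TAGS:
        raise ValueError(f"not a Universal PoS tag: {upos!r}")
    return f"{lemma}_{upos}"


print(make_query_key("Удар", "noun"))  # удар_NOUN
```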

- 18/01/2019 — New models have been released, along with many significant changes; read the details.

- 21/12/2018 — We have published a summary of the RusVectōrēs user survey; take a look!

- 27/11/2018 — Your feedback is important! Together with HSE master's students, we kindly ask you to answer a few questions about RusVectōrēs (in Russian).

- 22/09/2018 — We have launched the RusVectōrēs bot in the Telegram messenger; also, the performance of the PoS tagger for user queries has been improved.

- 20/06/2018 — We added a model trained on the Taiga structured web corpus, presented at the "Dialog-2018" conference.

- 11/05/2018 — We have developed a tutorial explaining how text preprocessing is done, how to perform basic operations on word embeddings, and how to use the RusVectōrēs API.
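One of the most basic operations on word embeddings is finding a word's nearest neighbors by cosine similarity, which is what the "nearest semantic associates" lists show. The pure-Python sketch below uses tiny invented vectors; a real model has hundreds of dimensions and a large vocabulary, and the tutorial itself may rely on a library instead of code like this.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest(word: str, vectors: Dict[str, Vector], topn: int = 10) -> List[Tuple[str, float]]:
    """Return the topn words most cosine-similar to `word`, excluding the word itself."""
    query = vectors[word]
    scored = ((other, cosine(query, vec)) for other, vec in vectors.items() if other != word)
    return heapq.nlargest(topn, scored, key=lambda pair: pair[1])


# Invented 3-dimensional toy vectors, for illustration only.
toy = {
    "кошка_NOUN": [0.9, 0.1, 0.0],
    "кот_NOUN": [0.8, 0.2, 0.0],
    "собака_NOUN": [0.6, 0.4, 0.1],
    "бежать_VERB": [0.0, 0.1, 0.9],
}
print(nearest("кошка_NOUN", toy, topn=2))
```

`heapq.nlargest` avoids sorting the whole vocabulary when only the top few neighbors are needed.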

- 26/03/2018 — RusVectōrēs models won top rankings in the RUSSE'18 word sense induction shared task. Additionally, check out the updated fastText model trained on the Araneum corpus: it now uses not only 3-grams, but also 4-grams and 5-grams.
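fastText represents a word through its character n-grams, so widening the range from 3-grams to 3-to-5-grams gives each word more subword units. The simplified sketch below extracts n-grams from the word wrapped in `<` and `>` boundary markers, as in the original fastText design; it omits n-gram hashing and the separate whole-word vector that real fastText also uses, and the function name is hypothetical.

```python
from typing import List


def char_ngrams(word: str, minn: int = 3, maxn: int = 5) -> List[str]:
    """Character n-grams of '<word>' for minn <= n <= maxn (simplified fastText-style)."""
    if minn < 1 or maxn < minn:
        raise ValueError("need 1 <= minn <= maxn")
    wrapped = f"<{word}>"
    grams = []
    for n in range(minn, maxn + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    return grams


print(char_ngrams("кот", 3, 3))  # ['<ко', 'кот', 'от>']
print(len(char_ngrams("кот", 3, 5)))  # 6
```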

- 05/01/2018 — fastText models and proper names in the PoS tags: learn about the new features we introduced in 2017.

- 30/06/2017 — We added a model trained on Araneum Russicum Maximum, one of the largest Russian web corpora (more than 10 billion words). In addition, all the models have been re-evaluated on the RuSimLex965 semantic similarity test set (it is more consistent than RuSimLex999).
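Evaluation on a SimLex-style test set usually means correlating the model's similarity scores for word pairs with human judgments, and Spearman's rank correlation is the common metric; that this is exactly how RuSimLex965 scores were computed here is an assumption. The sketch computes Spearman's rho as the Pearson correlation of tie-averaged ranks. The numbers are invented, not RuSimLex965 data.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sd_x * sd_y)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the (tie-averaged) ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


# Invented toy numbers, not RuSimLex965 data.
human_scores = [9.0, 7.5, 2.0, 1.0]
model_scores = [0.81, 0.64, 0.30, 0.35]
print(round(spearman(human_scores, model_scores), 3))  # 0.8
```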

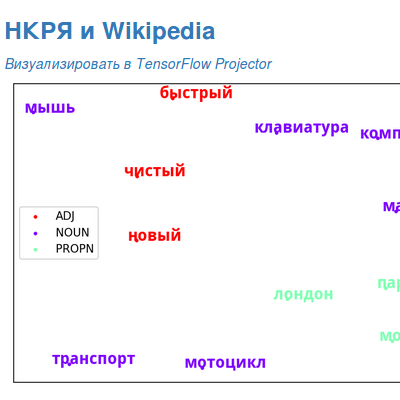

- 30/06/2017 — Visualizations have been substantially upgraded. You can now use several word sets as input: each set is shown in a different color. If there is only one set, the colors correspond to the words’ parts of speech. Additionally, you can now visualize your data in the TensorFlow Embedding Projector with one mouse click.
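For readers who want to load their own vectors into the standalone Embedding Projector, a minimal sketch is below. It assumes the projector's two-file TSV input: one tab-separated vector per line, plus a metadata file with one label per line and no header row when there is only one column. How the site's one-click export is implemented is not described here, and `write_projector_tsv` is a hypothetical helper.

```python
import io
from typing import Dict, Sequence, TextIO


def write_projector_tsv(
    vectors: Dict[str, Sequence[float]], vec_out: TextIO, meta_out: TextIO
) -> None:
    """Write one tab-separated vector per line and the matching label per line."""
    for word, vec in vectors.items():
        if "\t" in word or "\n" in word:
            raise ValueError(f"label contains a tab or newline: {word!r}")
        vec_out.write("\t".join(f"{x:.6f}" for x in vec) + "\n")
        # Single-column metadata: no header row.
        meta_out.write(word + "\n")


vecs = io.StringIO()
meta = io.StringIO()
write_projector_tsv({"кошка_NOUN": [0.9, 0.1], "кот_NOUN": [0.8, 0.2]}, vecs, meta)
print(vecs.getvalue(), meta.getvalue(), sep="")
```

For real files, pass handles opened with `open(path, "w", encoding="utf-8")` instead of `io.StringIO`.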

- 09/03/2017 — We now have a separate page for models, where you can download both recent and archived models and compare them against each other. We have also added links to Russian test sets and to the conversion table from Mystem tags to Universal PoS Tags.
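Mystem reports grammatical information as a string such as `S,муж,неод=им,ед`, where the part of speech comes first. The sketch below reads that first field and maps it to a Universal PoS tag. The mapping is a partial, illustrative subset covering unambiguous cases; it is not the project's published conversion table, which should be used for real work.

```python
import re
from typing import Optional

# Partial, illustrative Mystem -> UPOS mapping (not the official conversion table).
MYSTEM_TO_UPOS = {
    "S": "NOUN",
    "A": "ADJ",
    "V": "VERB",
    "ADV": "ADV",
    "PR": "ADP",
    "SPRO": "PRON",
    "NUM": "NUM",
    "PART": "PART",
    "INTJ": "INTJ",
}


def mystem_to_upos(gr: str) -> Optional[str]:
    """Map the leading PoS field of a Mystem 'gr' string to UPOS, or None if unmapped."""
    pos = re.split(r"[,=]", gr, maxsplit=1)[0].strip()
    return MYSTEM_TO_UPOS.get(pos)


print(mystem_to_upos("S,муж,неод=им,ед"))  # NOUN
```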

- 02/02/2017 — Learn about the new features we introduced in 2016 and watch a new screencast video on working with RusVectōrēs.

- 02/02/2017 — Major update of the models: the news corpus now covers events up until November 2016, and the Wikipedia dump is updated to the same date. Additionally, PoS tags are converted to the Universal Parts of Speech standard, and the models’ vocabularies now feature multi-word entities (bigrams).

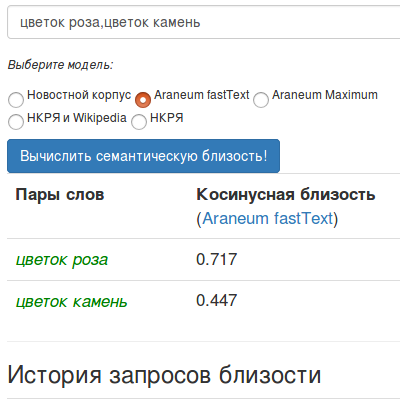

- 18/11/2016 — The API now allows you to get the semantic similarity of word pairs. Query format: https://rusvectores.org/MODEL/WORD1__WORD2/api/similarity/
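A small sketch of building the similarity query with the standard library, following the MODEL/WORD1__WORD2/api/similarity/ format above. Cyrillic words are percent-encoded so the URL can be passed to `urlopen`. The body is returned as raw text because its layout is not described here. The model name `news` comes from the 2016 examples and may no longer be served, and whether current models expect PoS-tagged words (such as `удар_NOUN`) is an assumption to check.

```python
from urllib.parse import quote
from urllib.request import urlopen

BASE_URL = "https://rusvectores.org"


def similarity_url(model: str, word1: str, word2: str) -> str:
    """Build MODEL/WORD1__WORD2/api/similarity/, percent-encoding each part."""
    return f"{BASE_URL}/{quote(model)}/{quote(word1)}__{quote(word2)}/api/similarity/"


def fetch_similarity_raw(model: str, word1: str, word2: str, timeout: float = 10.0) -> str:
    """Return the response body as text; its layout is not parsed here."""
    with urlopen(similarity_url(model, word1, word2), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


print(similarity_url("news", "удар", "толчок"))
# Network call, not run here: fetch_similarity_raw("news", "удар", "толчок")
```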

- 22/10/2016 — Hints now appear as you type a query. Note that some words are not covered by the hints (though they are rare and strange)!

- 01/07/2016 — For security reasons, the option to automatically train models on user-supplied corpora is now disabled. However, if you have an interesting corpus, contact us, and we will be glad to train a model for you.

- 07/04/2016 — RusVectōrēs source code has been released on GitHub as the WebVectors framework.

- 04/04/2016 — The API now provides output in JSON format. Example query: https://rusvectores.org/news/удар/api/json/

- 15/03/2016 — A web service with distributional models for English and Norwegian, based on the RusVectōrēs engine, has been launched.

- 03/02/2016 — We fixed a bug that broke the training of user models.

- 22/12/2015 — RusVectōrēs 2.0: Christmas Edition is officially released.

- 16/12/2015 — The news model has been updated. It is now trained on texts up to November 2015.

- 15/12/2015 — In the Similar Words tab, you can now filter results by the query's part of speech.
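If vocabulary entries carry a PoS tag after the last underscore (an assumption, e.g. `удар_NOUN`), filtering neighbors by the query's part of speech reduces to comparing tags. The sketch below illustrates the idea with an invented neighbor list; how the site itself implements the filter is not described here, and both function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Neighbor = Tuple[str, float]


def pos_of(key: str) -> str:
    """Return the tag after the last underscore in a 'lemma_TAG' key."""
    lemma, sep, tag = key.rpartition("_")
    if not sep or not lemma or not tag:
        raise ValueError(f"no PoS tag in {key!r}")
    return tag


def filter_like_query(query: str, neighbors: Sequence[Neighbor]) -> List[Neighbor]:
    """Keep only neighbors whose tag matches the query's tag, preserving order."""
    tag = pos_of(query)
    return [(w, s) for w, s in neighbors if "_" in w and w.rpartition("_")[2] == tag]


# Invented neighbor list with made-up scores, for illustration only.
neighbors = [("толчок_NOUN", 0.71), ("ударить_VERB", 0.65), ("удар_ADJ", 0.10), ("выстрел_NOUN", 0.60)]
print(filter_like_query("удар_NOUN", neighbors))
```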

- 11/12/2015 — The API is now implemented. It outputs the 10 nearest neighbors for a given word and model in one of two formats, JSON or CSV. Examples: https://rusvectores.org/news/удар/api/json/ or https://rusvectores.org/news/удар/api/csv/
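A minimal standard-library sketch of building the nearest-neighbor query URLs shown above, in either output format, and fetching the raw response. The response layout is not documented on this page, so the sketch does not parse it; for the JSON format, `json.loads` on the returned text is the natural next step. The helper names are hypothetical, and the `news` model comes from the 2015 examples and may no longer be available.

```python
from urllib.parse import quote
from urllib.request import urlopen

BASE_URL = "https://rusvectores.org"
FORMATS = frozenset({"json", "csv"})


def neighbors_url(model: str, word: str, fmt: str = "json") -> str:
    """Build MODEL/WORD/api/FORMAT/, percent-encoding the model and word."""
    if fmt not in FORMATS:
        raise ValueError(f"unsupported format: {fmt!r}")
    return f"{BASE_URL}/{quote(model)}/{quote(word)}/api/{fmt}/"


def fetch_neighbors_raw(model: str, word: str, fmt: str = "json", timeout: float = 10.0) -> str:
    """Return the response body as text without assuming its structure."""
    with urlopen(neighbors_url(model, word, fmt), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


print(neighbors_url("news", "удар", "csv"))
# Network call, not run here: fetch_neighbors_raw("news", "удар", "json")
```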